AI Seminar: What can we learn from subtitled sign language data?

Speaker

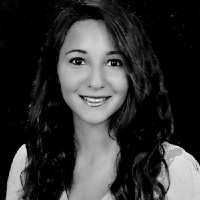

Gül Varol is a permanent researcher at the IMAGINE team of École des Ponts ParisTech. Previously, she was a postdoctoral researcher at the University of Oxford (VGG), working with Andrew Zisserman. She obtained her PhD from the WILLOW team of Inria Paris and École Normale Supérieure (ENS). Her thesis, co-advised by Ivan Laptev and Cordelia Schmid, received the ELLIS PhD Award. During her PhD, she spent time at MPI, Adobe, and Google. Prior to that, she received her BS and MS degrees from Boğaziçi University. Her research is focused on computer vision, specifically human understanding in videos, such as action recognition, body shape and motion analysis, and sign languages.

Abstract

Research on sign language technologies has suffered from a lack of data to train machine learning models. This talk will describe our recent efforts on scalable approaches to automatically annotate continuous sign language videos, with the goal of building a large-scale dataset. In particular, we leverage weakly-aligned subtitles from sign-interpreted broadcast footage. These subtitles provide us with candidate keywords to search for and localise individual signs. To this end, we develop three sign spotting techniques: (i) using mouthing cues at the lip region, (ii) looking up videos from sign language dictionaries, and (iii) exploring the sign localisation that emerges from the attention mechanism of a sequence prediction model. With these methods, we build the BBC-Oxford British Sign Language Dataset (BOBSL), which comprises more than a thousand hours of continuous signing videos and contains millions of sign instance annotations from a large vocabulary. More information about the dataset can be found at https://arxiv.org/abs/2111.03635.

This seminar is part of the AI Seminar Series organised by the SCIENCE AI Centre. The series highlights advances and challenges in research within Machine Learning, Data Science, and AI. Like the AI Centre itself, the seminar series has a broad scope, covering new methodological contributions, ground-breaking applications, and impacts on society.